It has been too long since I last posted, so I decided to show off some of what we've been working on at Kelman Technologies.

The screenshots show off the IPE (Interactive Processing Environment). It is our latest generation of seismic processing tools, based on Eclipse RCP and other Eclipse projects. Before you get your mouse in a knot, this isn't a sales pitch. The IPE is not for sale. We use open source tools to turn our intellectual property into tools for in-house use.

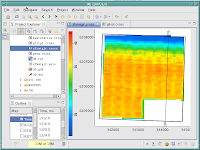

The IPE is the front end to our geophysical processing system. We use it to help our clients find and enhance oil and gas reserves around the world. Developing software for this industry is a challenge because of the immense amount of data that needs to be managed and processed. We deal with extremely large computer clusters, terabyte-sized disk farms, exabyte-sized tape systems and insane visualization requirements. Very few industries in the world have the same high-performance computing needs as the oil and gas industry.

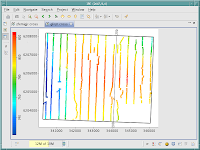

Cross plots have been a big challenge. How do you visualize 20 million or more data points? We have tried a number of different scientific plotting libraries, and all of them failed to handle that many data points. In early 2008 we will start work on our own plotting library to replace SGT, which itself replaced FreeHEP.

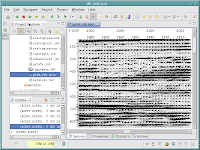

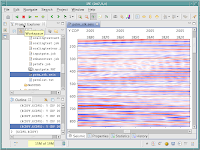

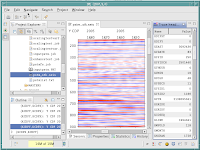

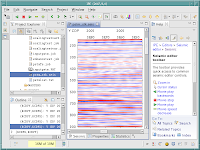

This is what the earth looks like to a geophysicist. A seismic plot can easily be 3 feet by 20 feet on paper. Convert that to a usable image on the screen and you have a 12,000 by 23,000 pixel image. That is 276,000,000 pixels, or about 828 megabytes of memory at 3 bytes per pixel. Our users expect to have dozens of these images open at one time. Libraries like Java Advanced Imaging were never designed for this sort of workload.
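The arithmetic is easy to check. Here is a minimal sketch, assuming 24-bit RGB (3 bytes per pixel), which is what the 828 megabyte figure implies; the class and method names are made up for illustration.

```java
// Back-of-the-envelope memory estimate for one uncompressed plot image.
// Assumption: 3 bytes per pixel (24-bit RGB), as implied by the figures in the text.
class PlotMemoryEstimate {
    // Use long arithmetic: dozens of these images overflow a 32-bit int quickly.
    static long pixelCount(long width, long height) {
        return width * height;
    }

    static long bytesNeeded(long width, long height, int bytesPerPixel) {
        return pixelCount(width, height) * bytesPerPixel;
    }

    public static void main(String[] args) {
        long pixels = pixelCount(12_000, 23_000);
        long bytes = bytesNeeded(12_000, 23_000, 3);
        System.out.println(pixels + " pixels");
        System.out.println(bytes / 1_000_000 + " megabytes for one image");
        System.out.println(bytes * 24 / 1_000_000_000 + " gigabytes for two dozen images");
    }
}
```

Two dozen open images at that size would need close to 20 gigabytes if they were all held uncompressed in memory, which is why a general-purpose imaging library struggles here.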

You are seeing seismic data plotted as wiggles and as a variable density plot. Seismic data files can be as large as 350 gigabytes, with the requirement that any seismic trace can be retrieved for immediate viewing. SWT has been surprisingly good at keeping up with the performance demands.
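For readers wondering how any trace in a file that size can come up immediately, here is a minimal sketch of random access by trace index. It assumes fixed-length traces stored after a fixed-size header. The layout, class name and parameters are made up for illustration and are not our real file format.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.ByteBuffer;

// Sketch only: a hypothetical layout with a fixed-size file header followed by
// fixed-length traces of 4-byte big-endian floats. Not a real seismic format.
class TraceReader {
    static final int BYTES_PER_SAMPLE = 4;

    // Byte offset of a trace, computed without touching the file.
    // traceIndex is a long so the product stays in 64-bit arithmetic.
    static long traceOffset(long headerBytes, int samplesPerTrace, long traceIndex) {
        return headerBytes + traceIndex * samplesPerTrace * BYTES_PER_SAMPLE;
    }

    // Seek straight to one trace and read only its samples.
    static float[] readTrace(RandomAccessFile file, long headerBytes,
                             int samplesPerTrace, long traceIndex) throws IOException {
        byte[] raw = new byte[samplesPerTrace * BYTES_PER_SAMPLE];
        file.seek(traceOffset(headerBytes, samplesPerTrace, traceIndex));
        file.readFully(raw);
        float[] samples = new float[samplesPerTrace];
        // ByteBuffer is big-endian by default, matching the assumed layout.
        ByteBuffer.wrap(raw).asFloatBuffer().get(samples);
        return samples;
    }
}
```

The point of the design is that the cost of fetching one trace depends on the trace length, not on the size of the file.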

We used BIRT for our spectral analysis editor. It made for a fast development cycle, but the business-oriented charting isn't up to plotting thousands of data points. The guys working on BIRT have been very responsive to our requests, but it's not a high-performance scientific charting API. Work on a replacement starts in 2008. We'll still use BIRT for our management-related reports.

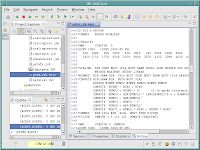

The seismic data keeps its own processing history. It's an audit trail of how the raw seismic field data was processed into a final client-ready dataset. It can take hundreds of processing steps and months of CPU time to generate one seismic dataset. The history alone for a single file can reach 100 gigabytes. Being able to create our own custom IDocument saves us from loading the whole file into memory.

Quality control of the seismic data is another big challenge. The volume of data makes it impossible for a human to inspect all of the project data.

Managing the volume of files has been a nightmare in the past. I've been impressed with how well the resources plugin combined with the Common Navigator Framework has been able to keep up with our demands.

We have used the Eclipse User Assistance API heavily. We have offices all around the world, so we have to provide easy access to good quality help documents. We are using Camtasia to create built-in videos. I don't want to get a call from our users in Libya at two in the morning.

We've written a smart job deck editor that has all of the features of the Java editor but it works with our proprietary processing language called kismet. Our seismic processors are a bit like software programmers. They write job decks that tell our seismic processing system how to manipulate the seismic data.

Our clients send data in a bewildering array of data formats. Using our standard database editor has reduced the confusion experienced by our users. As new formats appear we create a new translator and plug it into the IPE. All of our plotting plugins can then take advantage of the new data.
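The translator idea can be sketched in plain Java. Everything named here (`FormatTranslator`, `MagicBytesTranslator`, `TranslatorRegistry`) is hypothetical and only shows the shape of the approach. In an Eclipse application the registration would more likely go through an extension point than a hand-rolled list.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

// Hypothetical contract each format translator would implement.
interface FormatTranslator {
    String formatName();
    boolean canRead(byte[] leadingBytes);
}

// Illustrative translator that recognises a format by its leading "magic" bytes.
class MagicBytesTranslator implements FormatTranslator {
    private final String name;
    private final byte[] magic;

    MagicBytesTranslator(String name, byte[] magic) {
        this.name = name;
        this.magic = magic.clone();
    }

    @Override
    public String formatName() {
        return name;
    }

    @Override
    public boolean canRead(byte[] leadingBytes) {
        if (leadingBytes.length < magic.length) {
            return false;
        }
        for (int i = 0; i < magic.length; i++) {
            if (leadingBytes[i] != magic[i]) {
                return false;
            }
        }
        return true;
    }
}

// Plotting code asks the registry for a translator and never needs to know the format.
class TranslatorRegistry {
    private final List<FormatTranslator> translators = new ArrayList<>();

    void register(FormatTranslator translator) {
        translators.add(translator);
    }

    // Returns the first registered translator that accepts the data, if any.
    Optional<FormatTranslator> find(byte[] leadingBytes) {
        for (FormatTranslator translator : translators) {
            if (translator.canRead(leadingBytes)) {
                return Optional.of(translator);
            }
        }
        return Optional.empty();
    }
}
```

Supporting a new format then means registering one more translator; the code that consumes the data stays untouched.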

Kismet jobs can report errors and warnings. We have our own kismet builder/nature. We get the best of the Eclipse IDE for our users who have no programming experience. Editor user assistance, hover help, and other great built-in features make it the editor of choice.

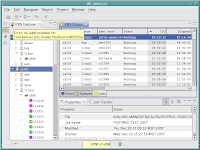

We used iBatis to access our PostgreSQL job tracker server. It's an unorthodox use of iBatis (the IPE is not web based) but it makes for a fast development cycle and easy maintenance. Our users can monitor a job on any cluster computer from the comfort of their desk.

For those times when our client sends us data in a mystery format with no file extension, we can load any binary file into our binary editor. We even have an ASCII table view to help with conversions from hex to octal to decimal to ASCII. I wonder if we'll need a slide rule view?
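As a small illustration of what one row of such a table holds, here is a sketch that formats a byte as hex, octal, decimal and ASCII. The class name and column layout are made up for this example and are not taken from the IPE.

```java
import java.util.Locale;

// Sketch of one row of an ASCII table: hex, octal, decimal, printable character.
// The class name and column layout are illustrative assumptions.
class AsciiTableRow {
    static String format(int byteValue) {
        int b = byteValue & 0xFF; // treat the input as an unsigned byte
        // Show a dot for control characters and anything outside printable ASCII.
        char shown = (b >= 0x20 && b < 0x7F) ? (char) b : '.';
        return String.format(Locale.ROOT, "%02X  %03o  %3d  %c", b, b, b, shown);
    }

    public static void main(String[] args) {
        System.out.println("HEX OCT  DEC  CHR");
        for (int b = 0x41; b <= 0x45; b++) {
            System.out.println(format(b));
        }
    }
}
```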

Another sore point I have is with the available image libraries. The seismic industry image standard is TIFF on steroids. We can't load a typical TIFF image into an SWT Image object because it will use over 2 gigabytes of memory. We're still working out performance kinks on this one. I'll be looking at Batik and libTiff in 2008. The SWT TIFF support wasn't designed with us in mind.

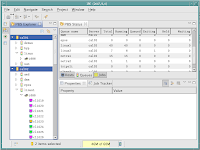

This is our front end to the Portable Batch System (PBS), developed by NASA back in the early 1990s.

We use PBS to manage our computer clusters. Users can submit hundreds or thousands of processing jobs with a simple click of a button. It's grid computing on steroids.

The processing requirements vary depending on the state of the seismic data. We still have to manually configure our computers for peak performance. That is why we have so many different processing queues. I know it sounds like we're in the age of ENIAC, but we still haven't found computer hardware that is flexible enough to handle all our high-performance processing needs. Heck, we're looking at moving our processing system onto GeForce graphics cards to squeeze out every last FLOP.

Jobs, jobs and more jobs. Managing thousands of jobs that could run for weeks is getting easier. Using an Eclipse view to filter and sort jobs has made life a little less stressful for our users. More advanced tools are in the works for 2008 and 2009 as we try to figure out better ways for our cluster computers to manage themselves.

Bless the wiki. We use it more and more to keep our internal users informed. The Eclipse platform allows us to search our internal doc, our wiki, and our Eclipse based info center with the click of one button. It's great.

We are pushing Eclipse RCP to extremes that I know were never envisioned by the Eclipse team back in 2001. Although we've found a few performance weaknesses, we've never regretted our decision to adopt Eclipse RCP.

1 comment:

Cool stuff!

I believe you're speaking at EclipseCon, is that correct?
